Cell-Li-Gent

🔋Tinkering on neural networks for battery modeling and agent-based operating adventures 🚀

What is it about?

Cell-Li-Gent is a cutting-edge project focused on data-driven battery modeling and state estimation using deep learning. It is also intended to explore battery operating strategies using reinforcement learning (RL) agents. The primary goal is to simplify battery modeling, reduce expensive tests, and enable the prediction of arbitrary parameters to improve control and operation.

Why bother?

I see huge potential in gradient-based curve fitting. Within the operating range, battery modeling could become exhaustive, which would reduce the problem beyond this range to binary classification or anomaly detection.

As the renowned physicist Edward Witten remarked about string theory, once a robust theory is established, it becomes too valuable to ignore. I believe the same applies to current backpropagation techniques and high-dimensional curve fitting. Given significant successes in fields as diverse as image compression [1], weather forecasting [2], self-driving cars [3], high-fidelity simulations with DeepONet [4] and PINNs [5], protein folding [6], agentic systems such as AlphaGo [7] and AlphaTensor [8], and NLP with ChatGPT/Llama3 [9], this approach promises remarkable results.

Moreover, advancements in traditional modeling and physics directly enhance this data-driven approach by improving data quality, leading to mutual benefits across domains. This method also promises to:

- Decrease development and inference time ⏳

- Lower the expertise required for modeling

- Save costs 💵

- Reduce tedious tasks, improving employee well-being 😊

What is the market?

- Everything with a battery: power grid technology, renewables, electric vehicles (EVs), etc. 🔋

- Battery Management Systems (BMS): low-level and control systems

- State estimation

- Operation/charging strategy

- High-fidelity physics-based simulation (Finite Element, etc.)

What is the catch?

- The field remains largely empirical 📉

- High compute requirements make it expensive 💵

What is the plan?

Stage 1:

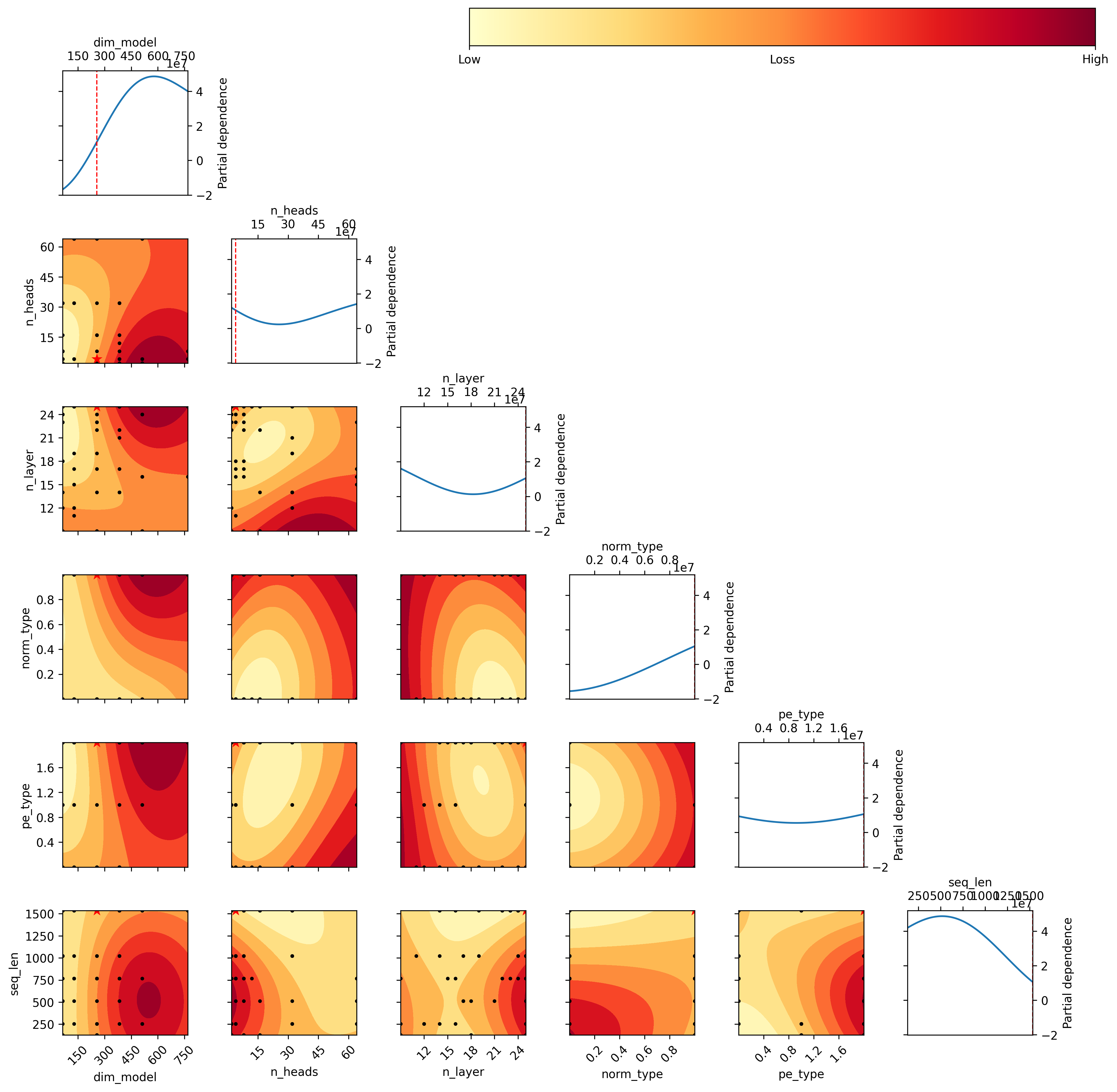

- Conduct extensive hyperparameter optimization (HPO) and explore state-of-the-art (SOTA) architectures for multivariate time series prediction.

- Develop a reduced-order model that strikes a balance between equivalent circuit models (ECM) and Doyle-Fuller-Newman (DFN) models, improving fit and simplifying testing with arbitrary output parameters.
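To illustrate the HPO step, here is a minimal random-search sketch. The search space, its ranges, and the `train_and_validate` objective are hypothetical placeholders (the objective is a synthetic function standing in for a real training run), not the project's actual setup or tooling.

```python
import math
import random
from typing import Callable, Dict, Tuple

# Hypothetical search space: parameter name -> sampler drawing from a seeded RNG
SEARCH_SPACE: Dict[str, Callable[[random.Random], object]] = {
    "lr": lambda rng: 10 ** rng.uniform(-5, -2),        # log-uniform learning rate
    "d_model": lambda rng: rng.choice([64, 128, 256]),  # model width
    "n_layers": lambda rng: rng.randint(2, 8),          # model depth (inclusive range)
    "dropout": lambda rng: rng.uniform(0.0, 0.3),
}


def train_and_validate(params: Dict[str, object]) -> float:
    """Hypothetical stand-in for a training run that returns a validation loss.

    Uses a synthetic bowl-shaped function so the sketch runs without a GPU.
    """
    lr = float(params["lr"])
    n_layers = int(params["n_layers"])
    dropout = float(params["dropout"])
    return (math.log10(lr) + 3.5) ** 2 + 0.01 * abs(n_layers - 4) + dropout


def random_search(n_trials: int, seed: int = 0) -> Tuple[Dict[str, object], float]:
    """Sample n_trials configurations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_params: Dict[str, object] = {}
    best_loss = math.inf
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in SEARCH_SPACE.items()}
        loss = train_and_validate(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


if __name__ == "__main__":
    params, loss = random_search(n_trials=50)
    print(f"best loss {loss:.4f} with {params}")
```

Because trials come from a seeded generator, a longer run with the same seed replays the shorter run's trials first, so it can only match or improve the best loss. Dedicated HPO libraries typically replace the uniform sampling with model-based samplers and stop unpromising trials early.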

Stage 2:

- Analyze and implement RL agents for optimizing operating and charging strategies.

- Optimize the model for real-time capability, whether embedded or remote/cloud-based.

- Train the model on various chemistries to create a meta-foundation model requiring minimal fine-tuning for new cell chemistry models.

Future Stages:

- Explore ASIC development and 3D modeling.

- Discover partial differential equations (PDEs) that better describe the system.

Who are you?

I am a passionate engineer who enjoys making predictions and pushing the boundaries of applied science. My goal is to translate innovative concepts into impactful products that contribute to a better tomorrow. I balance my “nerdy” interests with activities like socialising 😂, traveling, kite surfing, enduro riding, bouldering, and calisthenics, and I enjoy listening to electronic music. Although I hold formal education certificates, much of my expertise is self-taught, fueled by curiosity and a drive for innovation. I believe in sharing knowledge and rapid iteration to foster progress.

Feel free to connect, support or chat: E-Mail | X | LinkedIn 🤝

Recent Progress:

Cell-Li-Gent, April 2024 - July 2024:

See the project repository for details.

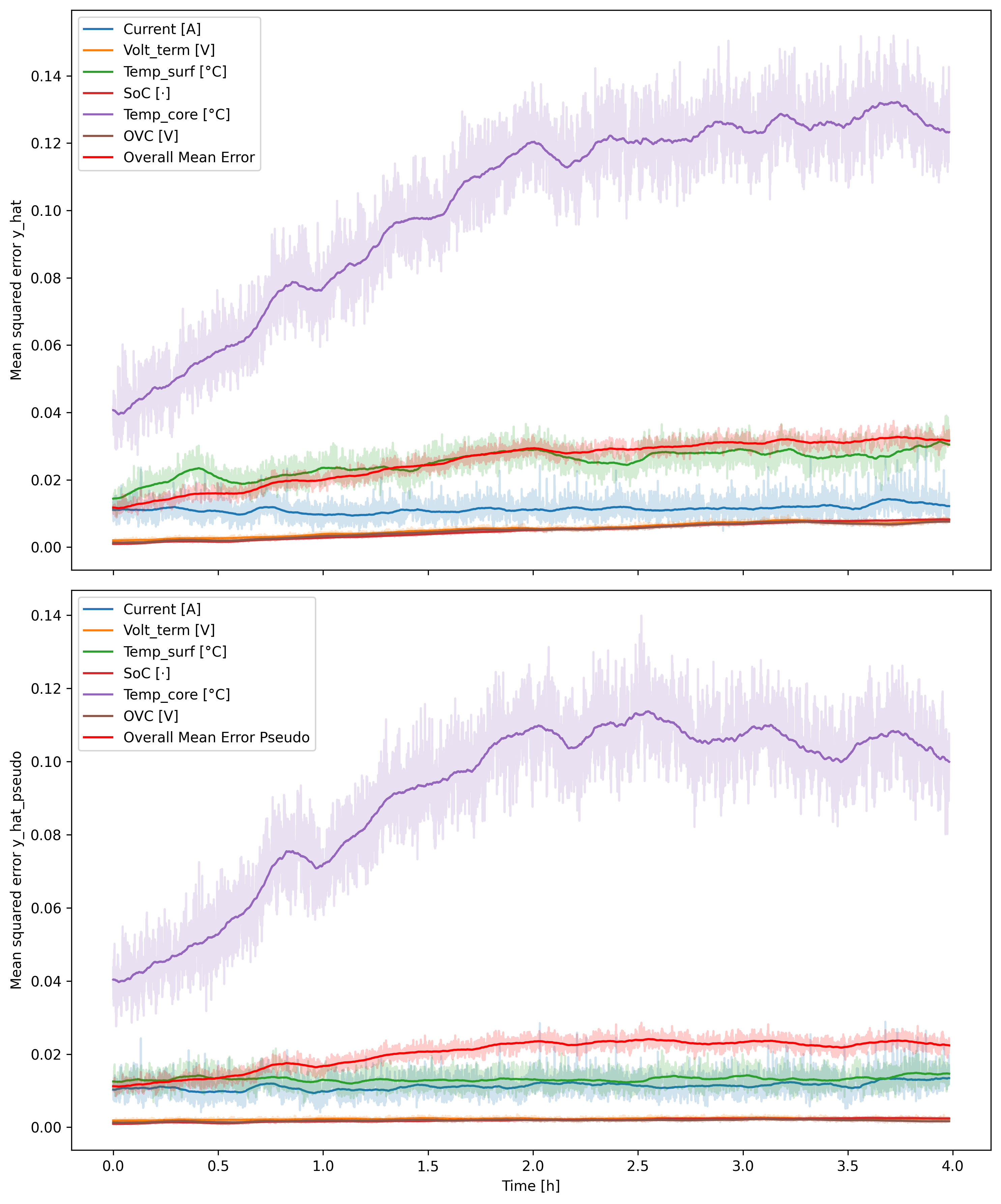

- Error-over-time analysis

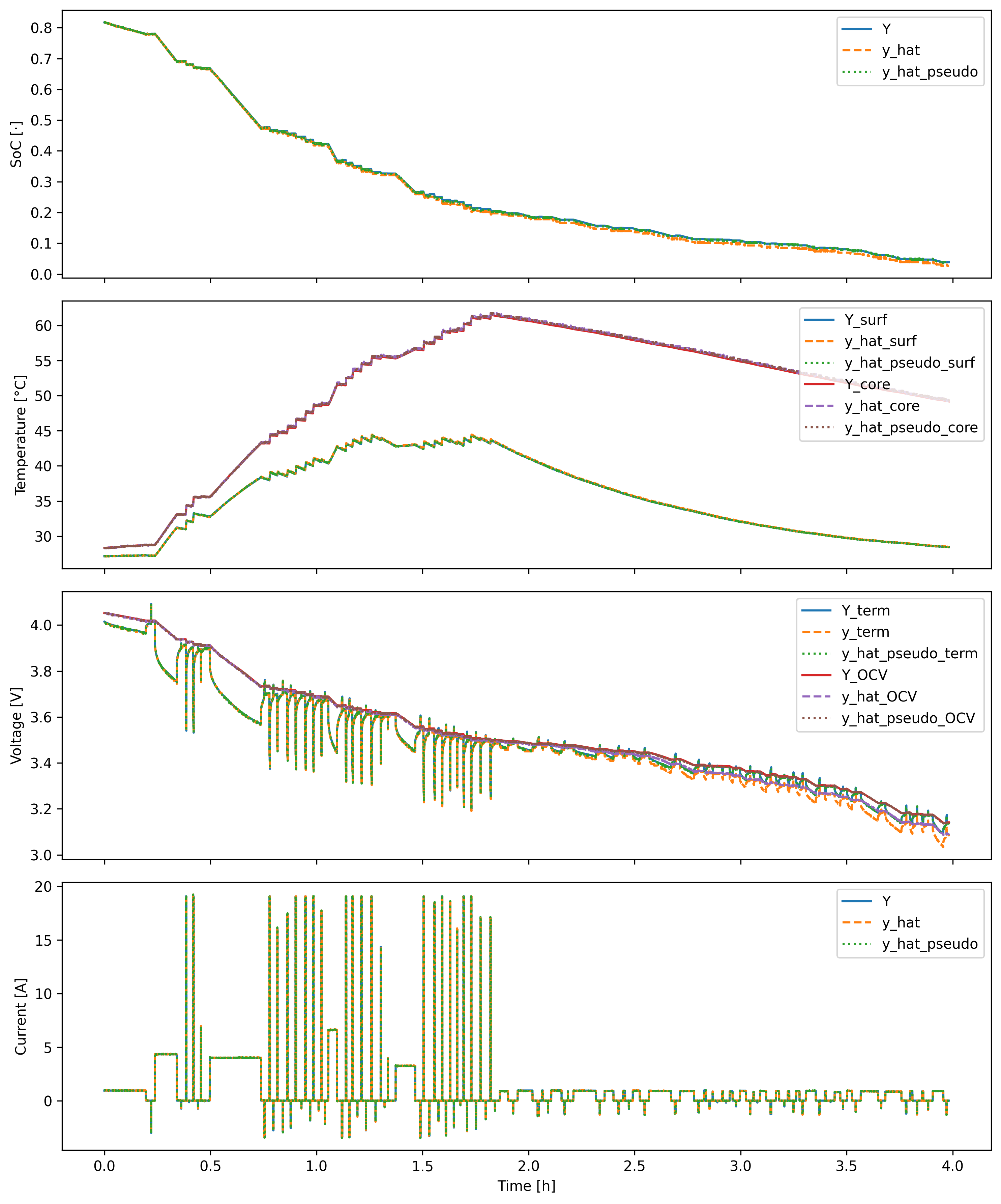

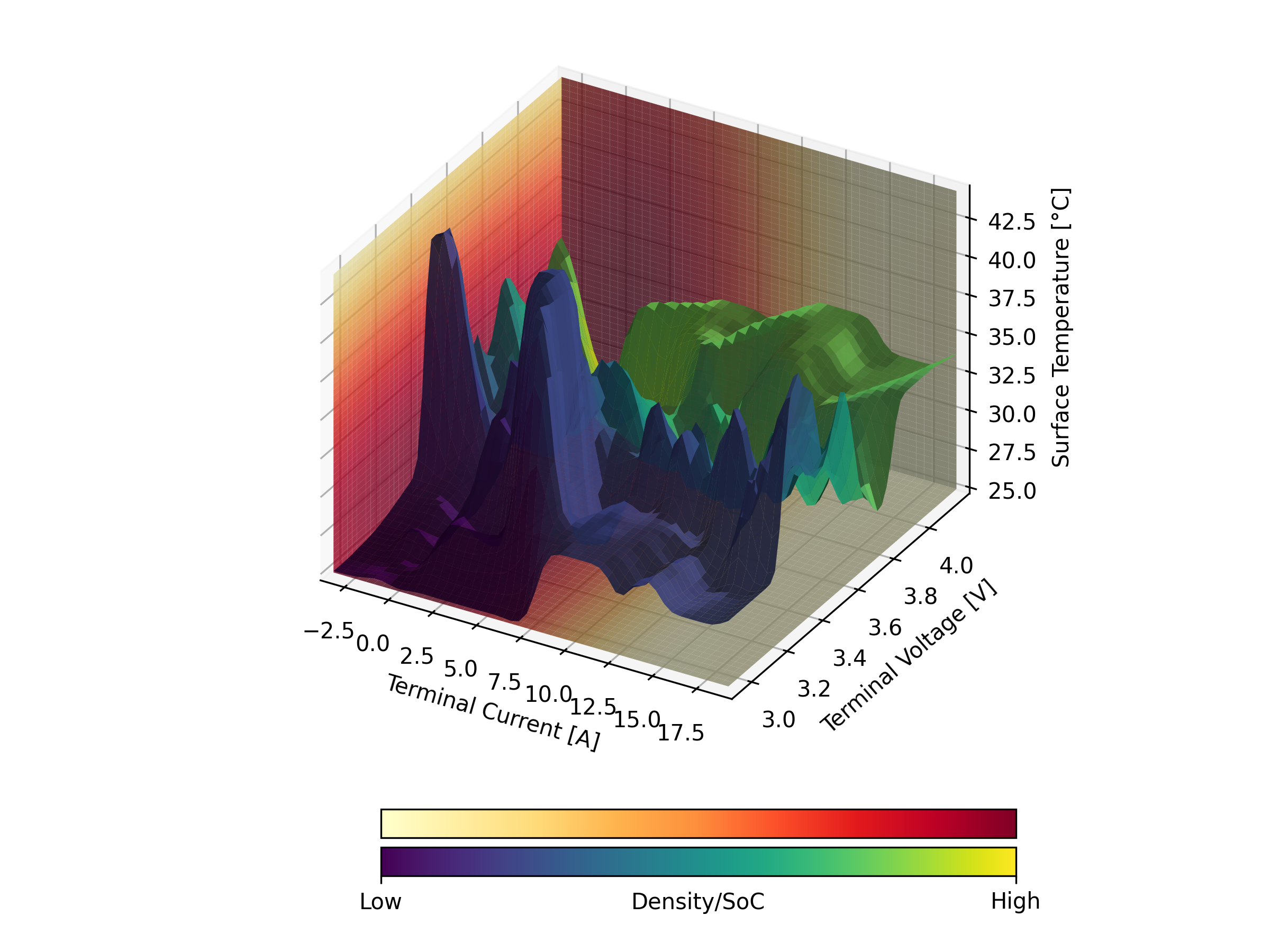

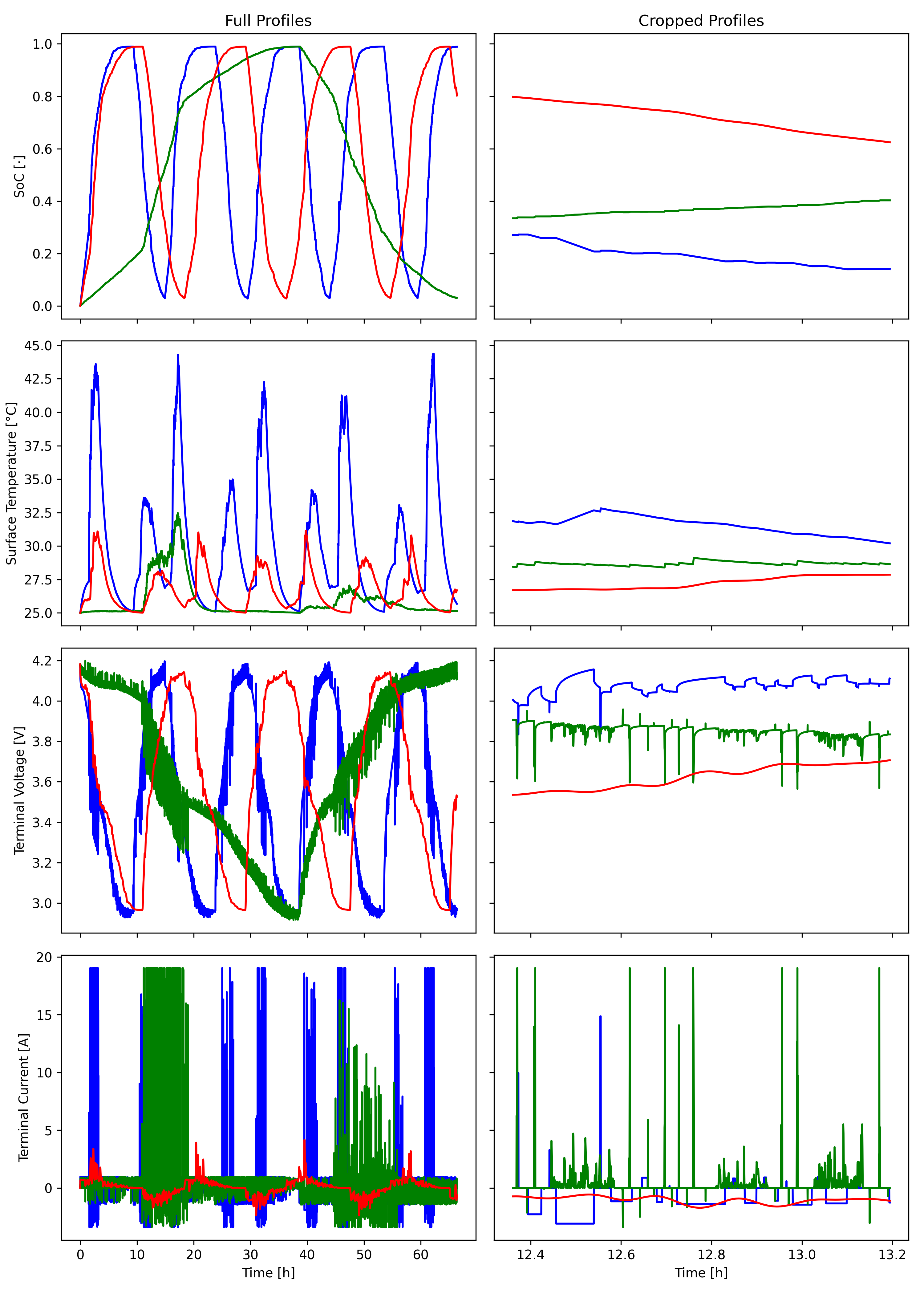

- Evaluated model outputs on synthetic datasets generated with the PyBaMM SPMe model and default parameters.

- Conducted HPO runs for Transformer (and Mamba) models.

- Implemented Transformer and Mamba models.

- Set up an SPMe model with a lumped thermal model for core and surface temperatures.

- Generated synthetic datasets within datasheet limits.
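The error-over-time analysis can be illustrated as a per-time-step RMSE across a set of predicted and reference sequences (for example, model outputs versus PyBaMM-generated voltage traces), which shows whether errors accumulate along the prediction horizon. A minimal plain-Python sketch; the function name, the `[sequence][time step]` layout, and the toy data are assumptions rather than the repository's code.

```python
import math
from typing import List, Sequence


def rmse_over_time(
    pred: Sequence[Sequence[float]], target: Sequence[Sequence[float]]
) -> List[float]:
    """RMSE at each time step, averaged over sequences.

    Assumed layout: pred[i][k] is sequence i at time step k.
    """
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target need the same, non-zero number of sequences")
    n_steps = len(pred[0])
    if any(len(p) != n_steps for p in pred) or any(len(t) != n_steps for t in target):
        raise ValueError("all sequences must share the same length")
    errors = []
    for k in range(n_steps):
        # Mean squared error over all sequences at time step k
        mse = sum((p[k] - t[k]) ** 2 for p, t in zip(pred, target)) / len(pred)
        errors.append(math.sqrt(mse))
    return errors


if __name__ == "__main__":
    # Toy voltage traces in volts: the prediction slowly drifts from the reference
    target = [[4.20, 4.10, 4.00, 3.90], [4.10, 4.00, 3.90, 3.80]]
    pred = [[4.20, 4.11, 4.02, 3.93], [4.10, 4.01, 3.92, 3.83]]
    print([round(e, 3) for e in rmse_over_time(pred, target)])
```

With tensors of shape `(batch, time)`, the same reduction is a mean over the batch dimension only, `((pred - target) ** 2).mean(dim=0).sqrt()`, which keeps the time axis for plotting.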

Thesis:

See the thesis for details.

- Initial benchmark and meta-learning approach, including lab data, with limited compute resources and expertise 😅.

Miscellaneous tools and technology tags:

- PyTorch

- Single node training

- Python process management for freeing CUDA memory

- PyBaMM with a lumped thermal model for core/surface temperature [10]

- HDF5 (.h5) database with parallel reads

- SOTA Llama3 architecture from scratch with various positional encodings (Absolute, ALiBi, RoPE) [9], [11], [12], [13]

- Tailoring SOTA research models (Mamba, etc.) to the multivariate time series domain [14]

- Feature-wise loss

- Incorporating short-time Fourier transform (STFT) spectrograms as an additional input encoding

- Neovim
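One way to read the feature-wise loss tag: compute the loss separately for each output channel (e.g. voltage, core temperature, surface temperature) so that quantities on very different scales can be tracked and weighted individually instead of disappearing into a single average. A minimal plain-Python sketch under that interpretation; the function name, the `[time step][feature]` layout, the feature names, and the weighting scheme are all assumptions.

```python
from typing import Dict, Optional, Sequence, Tuple


def feature_wise_mse(
    pred: Sequence[Sequence[float]],
    target: Sequence[Sequence[float]],
    feature_names: Sequence[str],
    weights: Optional[Dict[str, float]] = None,
) -> Tuple[Dict[str, float], float]:
    """Return one MSE per feature and their weighted average.

    Assumed layout: pred[t][j] is feature j at time step t.
    Features missing from `weights` get weight 1.0.
    """
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target need the same, non-zero number of time steps")
    n_features = len(feature_names)
    sq_sums = [0.0] * n_features
    for p_row, t_row in zip(pred, target):
        if len(p_row) != n_features or len(t_row) != n_features:
            raise ValueError("each row needs exactly one value per feature")
        for j in range(n_features):
            sq_sums[j] += (p_row[j] - t_row[j]) ** 2
    per_feature = {name: s / len(pred) for name, s in zip(feature_names, sq_sums)}

    # Weighted average of the per-feature losses
    w = {name: (weights or {}).get(name, 1.0) for name in feature_names}
    total_w = sum(w.values())
    if total_w <= 0:
        raise ValueError("feature weights must sum to a positive value")
    total = sum(w[name] * per_feature[name] for name in feature_names) / total_w
    return per_feature, total


if __name__ == "__main__":
    names = ["voltage", "T_core", "T_surface"]
    target = [[3.70, 25.0, 24.0], [3.68, 26.0, 24.5]]
    pred = [[3.71, 25.5, 24.0], [3.66, 26.5, 24.0]]
    per_feature, total = feature_wise_mse(pred, target, names, weights={"voltage": 10.0})
    print(per_feature, total)
```

In a PyTorch training loop, the per-feature part reduces to `((pred - target) ** 2).mean(dim=(0, 1))` on a `(batch, time, features)` tensor, which yields one MSE per feature; logging these separately shows which quantity dominates the error.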